Is Lovable.dev SEO Friendly? A Guide to Getting Your Site Ranked

Lovable AI, the main coding engine behind Lovable.dev, is good at a lot of things, but because of the site architecture it uses, the sites it builds lack fundamental SEO best practices.

Lovable.dev is good at a lot of things. It excels at building nice-looking UIs with a prompt in minutes and creating basic functioning apps with Supabase or Lovable Cloud. However, SEO is not one of Lovable's strong suits.

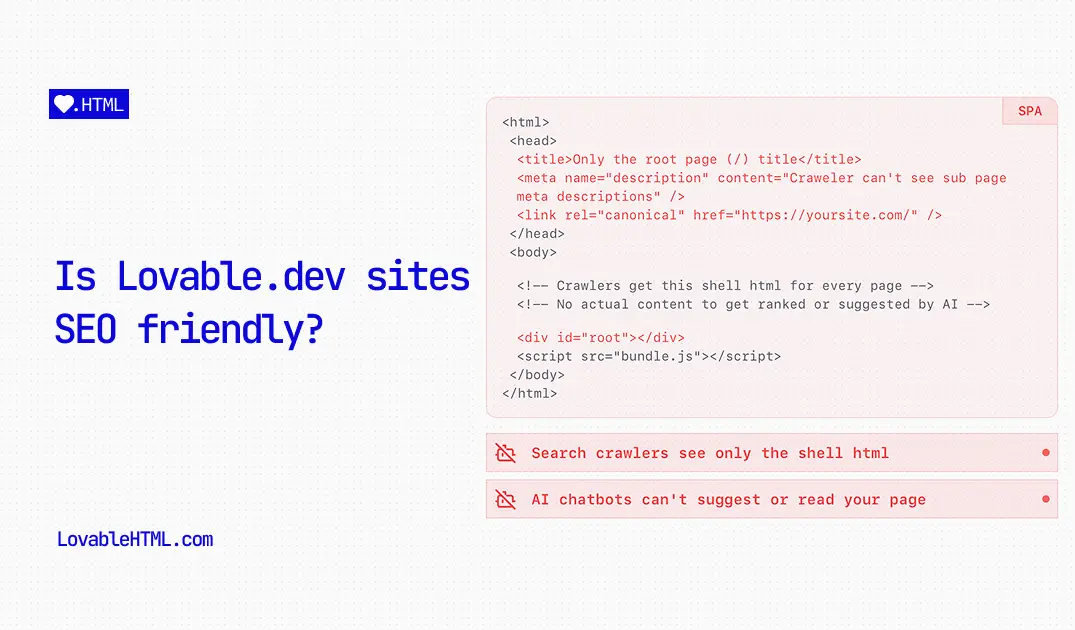

For a site or a page to rank for the keywords included in its content, it has to be fully crawlable by search engines. Since Lovable can only build client-side rendered, React Single Page Applications (SPAs), your content is only available when visited in a browser. This is because SPAs use JavaScript to render content. Most search engine crawlers either will not wait for the JavaScript to execute and load the content, or they do not crawl JavaScript-rendered pages at all, only receiving the basic shell from your index.html file.
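To make the "basic shell" concrete, here is a minimal Python sketch of what a crawler that does not execute JavaScript can read from raw HTML. `SHELL_HTML` and `RENDERED_HTML` are illustrative stand-ins, not the exact files Lovable generates, and `crawlable_text` is a hypothetical helper name.

```python
from html.parser import HTMLParser

# Illustrative stand-in for a client-side rendered index.html (an assumption).
SHELL_HTML = """<!doctype html>
<html>
  <head><title>My App</title></head>
  <body>
    <div id="root"></div>
    <script type="module" src="/src/main.tsx"></script>
  </body>
</html>"""

# The same page after rendering, with placeholder content (an assumption).
RENDERED_HTML = SHELL_HTML.replace(
    '<div id="root"></div>',
    '<div id="root"><h1>Welcome</h1><p>Hello, crawler.</p></div>',
)


class TextExtractor(HTMLParser):
    """Collects the text present in raw HTML, ignoring script and style bodies."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside script/style elements.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def crawlable_text(html):
    """Return the text a crawler gets without running any JavaScript."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

For the shell, `crawlable_text(SHELL_HTML)` yields only the page title, because the root div is empty until JavaScript runs. The rendered version also exposes the heading and paragraph text.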

Popular myths

If you search Reddit for "Lovable SEO", you will see a bunch of discussions from people trying to find solutions to this exact problem. Some people are realizing that it's not possible to fix, and others, for whatever reason, are proud to claim that they have found a magic prompt that fixes all your SEO problems. So I decided to take on the job of verifying whether those claims are legit or misleading.

"You can prompt Lovable to do it".

This one is easy to verify. Just run the prompt they claim fixes your issues, then do a curl or crawler simulator test on your site. You will find out that it's straight BS and your page still renders only <div id="root"></div>.

You cannot change a website made with Lovable.dev to support static site generation or server-side rendering just by prompting, no matter how nicely you ask. These magic prompts people claim to have found cannot do anything more than add meta tags and JSON-LD schemas. Lovable does not allow any kind of modification to the React SPA architecture of the sites it makes. Even if you port your code over to GitHub and change it manually to use vite-ssg or Next.js, for example, your Lovable preview breaks and you can no longer use it to make changes to your site or publish them. The same goes for modifying the build command in package.json or the build config in vite.config.ts: your site will fail to publish and the preview will not render. It just stops working. Lovable.dev can only build React single page applications, and that's it.

"Crawlers can easy crawl SPAs and execute JavaScript".

That's not true. Only some crawlers execute JavaScript, and they do so slowly, within a limited time budget, and without guarantees. Running a headless browser, which is essentially what a JavaScript-capable crawler is, is not a cheap compute task. Most crawlers execute JavaScript more slowly than a typical user's browser, so a crawler such as Googlebot may not execute complex JavaScript logic or wait until all page content has finished rendering before it times out and leaves. This causes flaky results: sometimes your internal links and page content are read in full, and sometimes they are not loaded at all.

"All my pages are indexed on Google.".

This is a common misconception as well. Having your pages indexed does not mean your content is indexed. When you submit your sitemap in Search Console, Googlebot will visit all the URLs listed in it. If it sees some kind of content, it will mark the URL as indexed. Google now knows the URL exists. What it does not know, however, is your content in its full form, the keywords your content may rank for, or your internal links. If you are not serving your pages as static HTML and are leaving the rendering of your content up to search engine and AI crawlers, the indexed content from your site is not guaranteed to be exactly what you see in your browser.

What's the solution, then?

There are two ways I have found to work consistently while fixing this for the freelance clients of my solo fractional software development agency.

- Migrate off Lovable to a framework that supports server-side rendering.

- Use a pre-rendering proxy to serve a plain HTML version of your site to crawlers.

Solution 1: Migrate the Lovable Site to SSR or SSG

This solution is to migrate your site from Client-Side Rendering (CSR) to Server-Side Rendering (SSR) or Static Site Generation (SSG). This is a technical process and requires some programming knowledge to debug potential issues.

There is a big caveat, however. After the migration, you can no longer use Lovable to make changes. Since Lovable only supports single-page applications, your site will no longer work in the Lovable editor: you cannot see previews, and Lovable AI's code quality will drop significantly since it is not trained on that kind of codebase.

Solution 2: Use a Prerender Proxy

This problem is not new; it has existed since the introduction of ReactJS in 2013. Solutions from back then still work for this use case. A prerender proxy intercepts requests to your site from crawlers and serves them a pre-rendered, static HTML version of your page.
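The interception step can be sketched roughly as below, assuming the proxy decides per request based on the `User-Agent` header. The pattern lists are short and illustrative, and `choose_upstream` is a hypothetical name. Neither Prerender.io nor LovableHTML is confirmed to work exactly this way.

```python
import re

# Illustrative, incomplete list of crawler user-agent tokens (an assumption;
# real services maintain much longer lists).
BOT_PATTERN = re.compile(
    r"googlebot|bingbot|duckduckbot|gptbot|claudebot|perplexitybot",
    re.IGNORECASE,
)

# Static files are passed through untouched; only HTML pages are pre-rendered.
STATIC_ASSET = re.compile(
    r"\.(js|css|png|jpe?g|svg|ico|woff2?|json|xml|txt)$", re.IGNORECASE
)


def is_crawler(user_agent):
    """True when the User-Agent header contains a known crawler token."""
    return bool(user_agent) and BOT_PATTERN.search(user_agent) is not None


def choose_upstream(path, user_agent):
    """Return which backend should answer: 'prerendered' or 'origin'."""
    if STATIC_ASSET.search(path):
        return "origin"
    return "prerendered" if is_crawler(user_agent) else "origin"
```

With this sketch, a request for `/pricing` from a Googlebot user agent is routed to the pre-rendered snapshot, while a regular browser still gets the normal SPA from the origin.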

There are two main providers of this service:

- Prerender.io: One of the older players in the industry, often used by legacy codebases. It requires code to set up, and your site has to be connected to Cloudflare. Pricing starts at $49/month.

- LovableHTML: A more recent player and a no-code alternative to Prerender.io. It is specifically built for AI website builders and is more commonly used by non-technical people because of its no-code nature. It starts at $9/month and supports AEO (Answer Engine Optimization), a more recent trend in search.

How does pre-rendering work?

When your site is connected to a pre-rendering solution like LovableHTML.com, it pre-renders all the pages defined in your sitemap into plain HTML/CSS and stores them, ready for when search or AI crawler requests come in. When crawlers visit your site looking for content, instead of the shell HTML (the root div), they receive the already pre-rendered, plain HTML/CSS version of your pages with all your content in it, ready to rank for keywords and to be read by AI chatbots.

This in turn boosts your traditional search and AEO rankings.
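The flow described above (read the sitemap, render each page once, store the result, serve it to crawlers) can be sketched in Python as follows. This is a simplified illustration, not how any particular provider implements it. The `render` callable is hypothetical and stands in for a headless browser, and `EXAMPLE_SITEMAP` is made-up data.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Made-up sitemap used only for illustration.
EXAMPLE_SITEMAP = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/pricing</loc></url>"
    "</urlset>"
)


def sitemap_paths(sitemap_xml):
    """Extract the URL paths listed in a sitemap.xml document."""
    root = ET.fromstring(sitemap_xml)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return [urlparse(loc.text.strip()).path or "/" for loc in locs]


def build_snapshot_cache(sitemap_xml, render):
    """Render every sitemap path once and store the HTML keyed by path.

    `render` is a hypothetical callable (path -> HTML string); a real
    service would drive a headless browser here.
    """
    return {path: render(path) for path in sitemap_paths(sitemap_xml)}


def serve_to_crawler(cache, path, shell_html):
    """Serve the stored snapshot, falling back to the SPA shell if missing."""
    return cache.get(path, shell_html)
```

One consequence of this design is that a page missing from the sitemap has no snapshot, so in this sketch a crawler requesting it would still receive the empty shell.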

What's an SPA (Single Page Application)?

A Single Page Application (SPA) is a website or web application that loads a single HTML page and then dynamically updates the content as a user interacts with it. Instead of loading entirely new pages from the server for each action, it rewrites the current page with new data. This creates a faster, more fluid user experience that feels similar to a desktop application. Frameworks like React, which Lovable.dev uses, are primarily used to build SPAs.

What does AEO stand for?

AEO stands for Answer Engine Optimization. It is an evolution of traditional SEO. While SEO focuses on ranking for keywords, AEO focuses on optimizing content to provide direct answers to user questions. The goal is to have your content featured in rich results like Google's "People Also Ask" boxes, featured snippets, voice search answers (from assistants like Siri or Alexa), and in the responses of AI chatbots.

What's AJAX SEO?

AJAX SEO refers to the set of practices and challenges related to making content loaded with AJAX (Asynchronous JavaScript and XML) crawlable and indexable by search engines. This is essentially the predecessor to the modern SPA/SEO problem. In the past, Google even supported a specific "hash-bang" #! URL scheme to help its crawlers find and render AJAX-driven content, though this method is now deprecated in favor of solutions like pre-rendering or server-side rendering.

How can I check if my site is an SPA or if it is crawlable?

You can test how a search engine crawler sees your site by using our free crawler simulator or via your terminal using the curl command. To use the curl command, open a terminal (on macOS or Linux) or a command prompt with curl installed (on Windows) and run the following command, replacing https://your-website.com with your site's URL:

curl -i -A "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" -H "Accept: text/html" https://your-website.com

After running the command, inspect the HTML output in your terminal.

- If you see your page's text content, headings, and links within the HTML, your site is being served in a way that is crawlable.

- If you see a mostly empty <body> tag with just a <div id="root"></div> and no content in it except a few <script> tags, it confirms your site is a client-side rendered SPA and crawlers are likely not seeing your content.
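If you would rather script this check than read the curl output by eye, a minimal Python sketch could look like the following. It assumes the React mount point is a div with the id `root`, as in the example above, and the function names are hypothetical. The regex is only a heuristic and will not catch every kind of empty shell.

```python
import re
import urllib.request

GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)

# Matches an empty mount point such as <div id="root"></div>.
EMPTY_ROOT = re.compile(r'<div\s+id=["\']root["\']\s*>\s*</div>', re.IGNORECASE)


def looks_like_empty_shell(html):
    """Heuristic: True when the root div arrives with no content inside it."""
    return EMPTY_ROOT.search(html) is not None


def fetch_as_googlebot(url, timeout=10):
    """Fetch raw HTML with a Googlebot user agent, without running JavaScript."""
    request = urllib.request.Request(
        url, headers={"User-Agent": GOOGLEBOT_UA, "Accept": "text/html"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

A call such as `looks_like_empty_shell(fetch_as_googlebot("https://your-website.com"))` returning `True` corresponds to the second case above: the crawler is receiving only the shell.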